Lesson 3 Python Geo and Data Science Packages & Jupyter Notebooks

3.1 Overview and Checklist

This lesson introduces a few more Python programming concepts and then focuses on conducting (spatial) data science projects in Python with the help of Jupyter Notebooks. In the process, you will get to know quite a few more useful Python packages and 3rd-party APIs including pandas, GDAL/OGR, and the Esri ArcGIS for Python API.

Please refer to the Calendar for specific time frames and due dates. To finish this lesson, you must complete the activities listed below.

| Step | Activity | Access/Directions |
|---|---|---|
| 1 | Engage with Lesson 3 Content | Begin with 3.2 Installing the required packages for this lesson |
| 3 | Programming Assignment and Reflection | Submit your code for the programming assignment, write-up with reflections, and code explanation |
| 4 | Quiz 3 | Complete the Lesson 3 Quiz |
| 5 | Questions/Comments | Remember to visit the Lesson 3 Discussion Forum to post/answer any questions or comments pertaining to Lesson 3 |

3.2 Installing the required packages for this lesson

This lesson will require quite a few different Python packages. We will take care of the installations right away so that you won't have to stop for them when working through the lesson content. We will use our Anaconda installation from Lesson 2 and create fresh Python environments within it. In principle, you could perform all the installations with a number of conda installation commands from the command line. However, there are a lot of dependencies between the packages, and it is easy to run into conflicts that are difficult to resolve. Therefore, we provide YAML (.yml) files that list all the packages we want in each environment with the exact version and build numbers we need. We create two new environments by importing the .yml files using conda in the command line interface ("Anaconda Prompt").

The first environment (ACP3_NBK) will be for the Assignment and will contain the packages needed for it. The second environment (ACP3_WLKTGH) will be for the walkthrough and is optional. It will contain the packages needed for the species distribution walkthrough if you want to follow along. For reference, we also provide the conda commands used to create the first environment at the end of this section, as well as a separate section with some troubleshooting steps you can try if you are unable to get a working environment.

One of the packages we will be working with in this lesson is the ESRI ArcGIS for Python API, which will require a special approach to authenticate with your PSU login. You will see this approach further down below, and it will then be explained further in Section 3.10.

Creating the ACP3_NBK Anaconda Python environment

Please follow the steps below and let us know if you run into issues.

1) Download the .zip file containing the .yml files from this link: ACP3_YML.zip, then extract the .yml files it contains. There are 4 files included in this .zip: one (ACP3_NBK) for the Assignment, one (ACP3_WLKTGH) with the packages needed for the species distribution walkthrough, and two files with "_Clean" at the end of their names that are provided as alternatives in case the more explicit .yml file does not create a working environment. You may want to have a quick look at the content of the ACP3_NBK.yml text file to see how, among other things, it lists the names of all packages for this environment with version and build numbers. Using a YAML file greatly speeds up the creation of the environment as the files are downloaded and dependencies don't need to be resolved on the fly by conda.

2) Open the program called "Anaconda Prompt" located in the start menu Anaconda Program folder, which was created during the Anaconda installation from Lesson 2.

3) Make sure you have at least 5 GB of free space on your C: drive (the environment will require around 3.5-4 GB). Then type in and run the following conda command to create a new environment called ACP311_NBK (for Anaconda Python 3.11 Notebook) from the downloaded .yml file. You will have to replace the ... to match the name of the .yml file, and adapt the path to the .yml file depending on where you have it stored on your hard disk.

conda env create --name ACP311_NBK -f "C:\489\...\ACP3_NBK.yml"

Conda will now create the environment called ACP3x_NBK (x being 11) according to the package list in the YAML file. This can take quite a lot of time; in particular, it will just say "Solving environment" for quite a while before anything starts to happen. If you want, you can work through the next few sections of the lesson while the installation is running. The first section that will require this new Python environment is Section 3.6. Everything before that can still be done in the ArcGIS environment you used for the first two lessons. When the installation is done, the ACP3x_NBK environment will show up in the environments list in the Anaconda Navigator and will be located at C:\Users\<user name>\Anaconda3\envs\ACP3x_NBK.

4) Let's now do a quick test to see if the new environment works as intended. In the Anaconda Prompt, activate the new environment with the following command (you'll need to activate your environment every time you want to use it):

activate ACP311_NBK

Then type in python and in Python run the following commands; all the modules should import without any error messages:

import pandas
import matplotlib
from osgeo import gdal
import geopandas
import shapely
import arcgis
from arcgis.gis import GIS
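If you prefer to see all failing modules at once instead of stopping at the first error, the import test can also be scripted. The following is only a minimal sketch and not part of the official setup steps; the helper name check_imports is hypothetical, and the module list simply mirrors the import statements above.

```python
import importlib


def check_imports(module_names):
    """Try to import each module; map its name to None (success) or an error text."""
    results = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            results[name] = None
        except Exception as exc:  # ImportError, including DLL load failures
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    # Module names taken from the import statements in this section
    modules = ["pandas", "matplotlib", "osgeo.gdal", "geopandas",
               "shapely", "arcgis", "arcgis.gis"]
    for name, error in check_imports(modules).items():
        status = "OK" if error is None else "FAILED - " + error
        print(f"{name:12} {status}")
```

Every module should be reported as OK in a working environment; any FAILED line tells you which package to look at first.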

Next, let's test connecting to ArcGIS Online with the ArcGIS for Python API mentioned at the beginning. Run the following Python command:

gis = GIS('https://pennstate.maps.arcgis.com', client_id='fuFmRsy8iyntv3s2')

Now a browser window should open up where you have to authenticate with your PSU login credentials (unless you are already logged in to Penn State). After authenticating successfully, you will get a window saying "OAuth2 Approval" and a box with a very long token at the bottom. In the Anaconda Prompt window, you will see a prompt saying "Enter token obtained on signing in using SAML:". Use CTRL+A and CTRL+C to copy the entire code, and then do a right-click with the mouse to paste the code into the Anaconda Prompt window. The code won't show up, so just continue by pressing Enter.

If you are having trouble with this step, Figure 3.18 in Section 3.10 illustrates the steps. You may get a short warning message (InsecureRequestWarning), but as long as you don't get a long error message, everything should be fine. You can test this by running this final command:

print(gis.users.me)

This should produce an output string that includes your pennstate ArcGIS Online username, so e.g., <User username:xyz12_pennstate>. More details on this way of connecting with ArcGIS Online will be provided in Section 3.10.

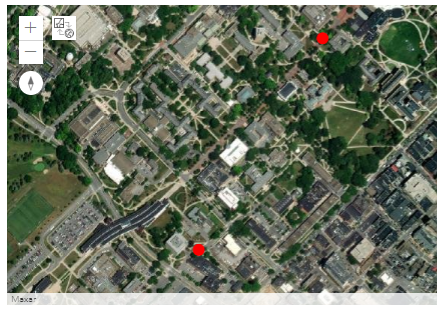

Testing the gis widgetsnbextension

Download this small Jupyter Notebook and run it by following the steps in Section 3.6.1. Be sure to enter your PSU username in the gis.users.get(...) method or use gis.users.me from above. If successful, you should see a map showing Penn State University, which means your widgetsnbextension package is working correctly.

If it fails to show, then we will need to do some validation of several of the packages in the environment and the process is outlined in section 3.2.1.

If creating the environment from the .yml file did NOT work:

As we wrote above, importing the .yml file with the complete package and version number list is probably the most reliable method to set up an exact clone of a Python environment for this lesson but there have been cases in the past where using this approach failed on some systems. Sometimes conda can fail to resolve the dependencies and removing some of the obscure packages from the .yml can help. Repeat the steps from above starting at step 2 using the version of the yml that has "_Clean". This yml only contains the main packages and sets the required version on the most important packages. If that does not work, you can try the troubleshooting steps outlined in section 3.2.1 or try creating the environment from scratch. Let your instructor know if you have reached this point and have not been able to get a working env.

Building from the Command Line

Maybe you are interested in the steps that were taken to create the environment from scratch. We therefore list the conda commands used from the Anaconda Prompt for reference below.

1) Create a new conda Python 3.11 environment called ACP311_NBK with some of the most critical packages:

conda create -n ACP311_NBK -c esri -c conda-forge python=3.11 nodejs arcgis=2.4.0.1 arcgis-mapping gdal geopandas matplotlib jupyter ipywidgets widgetsnbextension=4.0.13

2) As we did in Lesson 2, we activate the new environment using:

activate ACP311_NBK

3) Then we add the remaining packages:

conda install -c rpy2 maptools geopandas

4) Once we have made sure that everything is working correctly in this new environment, we can export a YAML file similar to the one we have been using in the first part above using the command:

conda env export > "C:\<output path>\ACP311_NBK.yml"

Creating the ACP311_WLKTGH Anaconda Python environment

As stated above, this environment will contain packages used for the species distribution walkthrough in section 3.6. Be sure to get the ACP3_NBK environment working first before attempting this one. This environment contains more packages and increases the complexity of the dependency tree solving.

1) Using the ACP3_WLKTGH.yml file from the .zip file that we downloaded, follow steps 2 and 3 from above but replace ACP3_NBK with ACP3_WLKTGH.

Let's now do a quick test to see if the new environment works as intended. In the Anaconda Prompt, activate the new environment with the following command (you'll need to activate your environment every time you want to use it):

activate ACP311_WLKTGH

Then type in python and in Python run the following commands; all the modules should import without any error messages:

import arcgis
import bs4
import pandas
import cartopy
import matplotlib
from osgeo import gdal
import geopandas
import rpy2
import shapely

If creating the environment from the .yml file did NOT work:

NOTE that this is only for walkthrough and is not required for the assignment. It is only needed if you want to execute the walkthrough code on your own. Before creating this environment, ensure that your Notebook environment for the assignment from above is working.

As we wrote above, repeat the steps from above starting at step 2 using the yml with the suffix _Clean. If that does not work, you can try the troubleshooting steps outlined in section 3.2.1 or try creating the environment from scratch. Let your instructor know if you have reached this point and have not been able to get a working env.

Potential issues

There is a small chance that the statement from osgeo import gdal will throw an error about DLLs not being found on the path, which looks like this:

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "C:\Users\professor\anaconda3\envs\AC3x\lib\site-packages\osgeo\__init__.py", line 46, in <module>
    _gdal = swig_import_helper()
  File "C:\Users\professor\anaconda3\envs\AC3x\lib\site-packages\osgeo\__init__.py", line 42, in swig_import_helper
    raise ImportError(traceback_string + '\n' + msg)
ImportError: Traceback (most recent call last):
  File "C:\Users\professor\anaconda3\envs\AC3x\lib\site-packages\osgeo\__init__.py", line 30, in swig_import_helper
    return importlib.import_module(mname)
  File "C:\Users\professor\anaconda3\envs\AC3P\lib\importlib\__init__.py", line 127, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "<frozen importlib._bootstrap>", line 1014, in _gcd_import
  File "<frozen importlib._bootstrap>", line 991, in _find_and_load
  File "<frozen importlib._bootstrap>", line 975, in _find_and_load_unlocked
  File "<frozen importlib._bootstrap>", line 657, in _load_unlocked
  File "<frozen importlib._bootstrap>", line 556, in module_from_spec
  File "<frozen importlib._bootstrap_external>", line 1166, in create_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
ImportError: DLL load failed while importing _gdal: The specified module could not be found.

On Windows, with Python >= 3.8, DLLs are no longer imported from the PATH. If gdalXXX.dll is in the PATH, then set the USE_PATH_FOR_GDAL_PYTHON=YES environment variable to feed the PATH into os.add_dll_directory().

If this happens, the fix is to set this environment variable before importing gdal (you would need to do this every time you want to import gdal):

import os
os.environ["USE_PATH_FOR_GDAL_PYTHON"] = "YES"
from osgeo import gdal

If the above fix doesn't work and the error is still thrown, check the PATH environment variable in the Anaconda Prompt by typing "path" and verifying that c:\osgeo4w\bin or osgeo4w64\bin is in the list. If it is not, either add it using set path=%PATH%;c:\osgeo4w\bin or go to System Properties -> Environment Variables -> System Variables -> Path and add it to the list. Sometimes paths to these \bin directories reference old versions or versions that were uninstalled, and this causes import errors. Keeping the paths in your system environment up to date can help avoid errors like these. Verify that the paths listed are valid by navigating to them in Windows Explorer. If you have (or had) mixed 32-bit and 64-bit QGIS applications on your PC at some point, it may be worth removing the older, unused versions.
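Checking every PATH entry by hand in Windows Explorer can be tedious. As a rough aid, the check can be sketched in a few lines of Python. The function name find_missing_path_entries is hypothetical, and the sketch only reports entries that do not exist as folders; it does not tell you whether an existing folder contains the right GDAL DLLs.

```python
import os


def find_missing_path_entries(path_value=None):
    """Return the PATH entries that do not exist as directories."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    # Split on the platform separator (';' on Windows) and drop empty entries
    entries = [e.strip().strip('"') for e in path_value.split(os.pathsep) if e.strip()]
    return [e for e in entries if not os.path.isdir(e)]


if __name__ == "__main__":
    for entry in find_missing_path_entries():
        print("Not found:", entry)
```

Any folder printed by this script is a candidate for removal or correction in the Path list under System Variables.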

3.2.1 Conda Environment Troubleshooting

The conda-libmamba-solver is an alternative dependency solver for the conda package manager. It uses the libmamba library and is designed to resolve package dependencies more efficiently and quickly than the classic conda solver. There is a good comparison to the classic solver documented here if you want to compare the two. You can check which solver your installation uses with the conda info command.

The libmamba solver is particularly useful when dealing with complex environments that involve many dependencies, as it can reduce environment creation time by using advanced optimization techniques. conda-libmamba-solver is distributed as a separate package, available on both conda-forge and defaults. The plugin needs to be present in the same environment you use conda from. According to the mamba documentation page, it ships with conda and should already be in your base environment. If it is not installed, activate your base environment and run this command:

conda install -n base conda-libmamba-solver

Once you’ve installed the conda-libmamba-solver, you can explicitly tell conda to use it for environment creation and package installation by passing the --solver option with the value libmamba. For example, the following command creates an environment using conda-libmamba-solver:

conda create --name ACP3 python=3.11 arcgis=2.3.1 numpy pandas --solver=libmamba

Or, using a YAML file in the same way as we tried before (note that environment files are read with conda env create):

conda env create --name ACP3 -f "c:\path to\ACP3.yaml" --solver=libmamba

You'll also notice that the logging is more detailed and explains why a combination of packages could not be resolved instead of just saying "Solving Failed" with no context. You can use this information to change package versions until you find a working combination. The fewer package versions you pin, the more likely it is that the environment will resolve. Sometimes it's beneficial to set only the versions of the main packages and let the solver figure out the rest.

If you prefer the conda-libmamba-solver to the classic conda solver, you can configure conda to use it by default. To do this, refer to the conda-libmamba-solver documentation.

Conda Clean

Note! Always make sure to use conda clean cautiously, as it might remove files that are needed. Review the command's output carefully to ensure it's not deleting anything important. If you're unsure, it's a good idea to ask for help or research more about the specific files it's deleting.

conda clean is a command provided by the conda package manager that helps maintain your environment by freeing up space. It removes unnecessary files and caches that could take up disk space in your conda environment. The command includes functionality to clean specific types of files:

- conda clean --packages: Removes unused packages

- conda clean --tarballs: Removes .tar files which have been extracted and are no longer needed

- conda clean --index-cache: Removes index cache which tends to speed up package lookup but can potentially become very large

- conda clean --source-cache: Removes source cache files. These files are generally required for building packages, once a package is built they are not used.

- conda clean --all: Combines all of the above.

If a conda environment install fails, there may be corrupted or incorrect packages left in the package cache. These can prevent you from creating new conda environments, or make your existing ones behave erratically. conda clean --packages can help by removing these unused packages; this will force conda to re-download them the next time it needs those packages. For instance, you may have an incomplete package that was downloaded due to a network error. The command conda clean --packages will remove the incomplete package from cache. The next time you try to create the environment, conda will attempt to download the package again, this time hopefully without any network issues.

Map fails to display - widgetsnbextension

If the map did not display with the test Notebook (in the browser, not your IDE), we need to check whether the environment contains a compatible widgetsnbextension version. In the Python Command window with the ACP311_NBK environment activated, run conda list and verify that the channel shown for widgetsnbextension is 'esri' and not 'conda-forge' or 'default'.

arcgis 2.4.0.1 py311_103 esri

arcgis-mapping 4.30.0 py_305 esri

...

widgetsnbextension 4.0.13 py_0 esri

If this channel is different, we need to uninstall widgetsnbextension and explicitly install from the esri channel by executing conda remove widgetsnbextension, and conda install -c esri widgetsnbextension. This may uninstall, update, and install other packages, which is ok. Once this is resolved, try the sample again. If this fails, let your instructor know.
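If the conda list output is long, the channel check can also be done with a few lines of Python. This is a minimal sketch under the assumption that the output uses the usual four columns (name, version, build, channel); the function name channel_of is hypothetical, and the sample text is copied from the listing above rather than read from a real environment.

```python
def channel_of(conda_list_text, package):
    """Return the channel column for a package in `conda list` text, or None if absent."""
    for line in conda_list_text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and the comment header
        parts = line.split()
        if parts[0] == package:
            # The channel column can be empty for packages from the default channel
            return parts[3] if len(parts) > 3 else ""
    return None


# Sample lines copied from the listing above
sample = """\
arcgis                    2.4.0.1          py311_103    esri
arcgis-mapping            4.30.0           py_305       esri
widgetsnbextension        4.0.13           py_0         esri
"""

print(channel_of(sample, "widgetsnbextension"))
```

To use it with your own environment, you could paste the text printed by conda list into the sample variable.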

3.3 Regular Expressions

To start off Lesson 3, we want to talk about a situation that you regularly encounter in programming: Often you need to extract part of a string, or all strings that match a particular pattern among a given set of strings, and simple slicing is not enough to do so.

For instance, you may have a list of person names and need to extract all names whose last name starts with the letter ‘J’. Or, you want to do something with all files in a folder whose names contain the sequence of numbers “154” and that have the file extension “.shp”. Or you want to find all occurrences where the word “red” is followed by the word “green” with at most two words in between in a longer text string. These would be difficult to achieve through slicing and would result in lengthy code that needs to account for all of the fringe cases.

Support for these kinds of matching tasks is available in most programming languages based on an approach for denoting string patterns that is called regular expressions.

A regular expression is a string in which certain characters like '.', '*', '(', ')', etc. and certain combinations of characters are given special meanings to represent other characters and sequences of other characters. Surely you have already seen the wildcard expression “*.txt” to stand for all files with arbitrary names but ending in “.txt”; regular expressions follow the same idea but use a richer notation.

To give you another example before we approach this topic more systematically, the following regular expression “a.*b” in Python stands for all strings that start with the character ‘a’ followed by an arbitrary sequence of characters, followed by a ‘b’. The dot here represents all characters and the star stands for an arbitrary number of repetitions. Therefore, this pattern would, for instance, match the strings 'acb', 'acdb', 'acdbb', etc.
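To see this pattern in action, here is a small sketch that uses the match(...) function of Python's re module, which is introduced in more detail below. The list of test strings is made up for illustration; 'ab' is included to show that the star also allows zero characters between the ‘a’ and the ‘b’.

```python
import re

pattern = "a.*b"
candidates = ["acb", "acdb", "acdbb", "ab", "bca", "acd"]

# re.match returns a match object on success and None otherwise
results = {text: re.match(pattern, text) is not None for text in candidates}

for text, matched in results.items():
    print(f"{text!r}: {matched}")
```

The first four strings match, while 'bca' (does not start with ‘a’) and 'acd' (contains no ‘b’) do not.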

Regular expressions like these can be used in functions provided by the programming language that, for instance, compare the expression to another string and then determine whether that string matches the pattern from the regular expression or not. Using such a function and applying it to, for example, a list of person names or file names allows us to perform some task only with those items from the list that match the given pattern.

In Python, the package from the standard library that provides support for regular expressions, together with the functions for working with them, is simply called “re”. The function for comparing a regular expression to another string and telling us whether the string matches the expression is called match(...). Let’s create a small example to learn how to write regular expressions. In this example, we have a list of names in a variable called personList, and we loop through this list, comparing each name to a regular expression given in variable pattern and printing out the name if it matches the pattern.

import re

personList = ['Julia Smith', 'Francis Drake', 'Michael Mason',
              'Jennifer Johnson', 'John Williams', 'Susanne Walker',
              'Kermit the Frog', 'Dr. Melissa Franklin', 'Papa John',
              'Walter John Miller', 'Frank Michael Robertson', 'Richard Robertson',
              'Erik D. White', 'Vincent van Gogh', 'Dr. Dr. Matthew Malone',
              'Rebecca Clark']

pattern = "John"

for person in personList:
    if re.match(pattern, person):
        print(person)

Output:

John Williams

Before we try out different regular expressions with the code above, we want to mention that the part of the code following the name list is better written in the following way:

pattern = "John"

compiledRE = re.compile(pattern)

for person in personList:
    if compiledRE.match(person):
        print(person)

Whenever we call a function from the “re” module like match(…) and provide the regular expression as a parameter to that function, the function will do some preprocessing of the regular expression and compile it into a data structure that allows for matching strings to that pattern efficiently. If we want to match several strings to the same pattern, as we are doing with the for-loop here, it is more time efficient to explicitly perform this preprocessing once, store the compiled pattern in a variable, and then invoke the match(…) method of that compiled pattern. In addition, explicitly compiling the pattern allows for providing additional parameters, e.g. when you want the matching to be done in a case-insensitive manner. In the code above, compiling the pattern happens in line 3 with the call of the re.compile(…) function, and the compiled pattern is stored in variable compiledRE. Instead of the match(…) function, we now invoke the method match(…) of the compiled pattern object in variable compiledRE (line 6), which only needs one parameter, the string that should be matched to the pattern. Using this approach, the compilation of the pattern only happens once instead of once for each name from the list as in the first version.
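As a small illustration of the additional parameters mentioned above, the following sketch compiles the same pattern once without and once with the re.IGNORECASE flag. The names 'JOHN DOE' and 'john smith' are invented for this example and are not part of personList.

```python
import re

# Made-up names to show the effect of case-insensitive matching
names = ["John Williams", "JOHN DOE", "john smith", "Papa John"]

caseSensitiveRE = re.compile("John")
caseInsensitiveRE = re.compile("John", re.IGNORECASE)

strict = [n for n in names if caseSensitiveRE.match(n)]
relaxed = [n for n in names if caseInsensitiveRE.match(n)]

print(strict)   # only the exact spelling "John" at the start
print(relaxed)  # any capitalization of "john" at the start
```

“Papa John” is found by neither version because match(…) still only looks at the beginning of the string.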

One important thing to know about match(…) is that it always tries to match the pattern to the beginning of the given string, but it allows for the string to contain additional characters after the entire pattern has been matched. That is the reason why, when running the code above, the simple regular expression “John” matches “John Williams” but not “Jennifer Johnson”, “Papa John”, or “Walter John Miller”. You may wonder how you could write a pattern that only matches strings that end in a certain sequence of characters. The answer is that Python's regular expressions use the special characters ^ and $ to represent the beginning and the end of a string, and this allows us to deal with such situations, as we will see a bit further below.
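As a brief preview of the $ character, here is a hedged sketch that uses a shortened version of the name list. It combines $ with .*, which stands for an arbitrary sequence of characters and is explained in detail further below.

```python
import re

names = ["John Williams", "Jennifer Johnson", "Papa John", "Walter John Miller"]

# .* skips over any leading characters; $ requires the string to end right after the pattern
endsWithJohn = [n for n in names if re.match(".*John$", n)]
endsWithSon = [n for n in names if re.match(".*son$", n)]

print(endsWithJohn)
print(endsWithSon)
```

Only “Papa John” ends in “John”, and only “Jennifer Johnson” ends in “son”.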

Now let’s have a look at the different special characters and some examples using them in combination with the name list code from above. Here is a brief overview of the characters and their purpose, grouped by theme:

| Character | Purpose |
|---|---|
| Literal Characters | |
| a, A, 1, etc. | Matches the character itself. |
| Dot (.) | |
| . | Matches any single character except a newline. |
| Anchors | |
| ^ | Matches at the start of the string. |
| $ | Matches at the end of the string. |
| Character Classes | |
| [abc] | Matches any one of the characters between the square brackets: a, b, or c. |
| [a-z] | Matches any one character from a to z. |
| [^abc] | Matches any character except a, b, or c. |
| Predefined Character Classes | |
| \d | Matches any digit (equivalent to [0-9]). |
| \D | Matches any non-digit. |
| \w | Matches any alphanumeric character or underscore (equivalent to [a-zA-Z0-9_]). |
| \W | Matches any character that is not matched by \w. |
| \s | Matches any whitespace character (spaces, tabs, line breaks). |
| \S | Matches any non-whitespace character. |
| Quantifiers | |
| * | Matches 0 or more of the preceding element. |
| + | Matches 1 or more of the preceding element. |
| ? | Matches 0 or 1 of the preceding element. |
| {n} | Matches exactly n occurrences of the preceding element. |
| {n,} | Matches n or more occurrences of the preceding element. |
| {n,m} | Matches between n and m occurrences of the preceding element. |
| Groups and Alternation | |
| (...) | Captures the matched substring for later use. |
| (?:...) | Groups without capturing. |
| \| | Acts like a logical OR. |
| Escaping Special Characters | |
| \ | Escapes special characters, making them literal, such as \. or \? to match a dot or a question mark. |
| Assertions | |
| (?=...) | Asserts that what follows the regex is present. |
| (?!...) | Asserts that what follows the regex is not present. |
| (?<=...) | Asserts that what precedes the regex is present. |
| (?<!...) | Asserts that what precedes the regex is not present. |
| Flags | |
| re.I or re.IGNORECASE | Makes the pattern case-insensitive. |
| re.S or re.DOTALL | Allows the dot . to match newline characters. |
| re.M or re.MULTILINE | Allows ^ and $ to match the start and end of each line within the string. |
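To show how several of these building blocks combine, here is one possible sketch for the task mentioned at the beginning of this section: finding text in which the word “red” is followed by the word “green” with at most two words in between. It is an illustration rather than the only solution, and it assumes that words are separated by whitespace only (no punctuation). The test sentences are made up.

```python
import re

# .*               any leading text (match() starts at the beginning of the string)
# (?<!\w)red       "red" not preceded by a word character, so "bored" is rejected
# (?:\s+\w+){0,2}  zero to two whitespace-separated words in between
# \s+green(?!\w)   "green" not followed by a word character, so "greenish" is rejected
compiledRE = re.compile(r".*(?<!\w)red(?:\s+\w+){0,2}\s+green(?!\w)")

sentences = ["The red and green flag",
             "red green",
             "red one two green",
             "red one two three green",
             "bored green",
             "red greenish"]

results = {s: compiledRE.match(s) is not None for s in sentences}

for sentence, matched in results.items():
    print(f"{sentence!r}: {matched}")
```

The first three sentences match; the last three fail because of too many words in between, the “red” inside “bored”, and the “green” inside “greenish”, respectively.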

Since the dot stands for any character, the regular expression “.u” can be used to get all names that have the letter ‘u’ as the second character. Give this a try by using “.u” for the regular expression in line 1 of the code from the previous example.

pattern = ".u"

The output will be:

Julia Smith
Susanne Walker

Similarly, we can use “..cha” to get all names that start with two arbitrary characters followed by the character sequence “cha”, resulting in “Michael Mason” and “Richard Robertson” being the only matches. By the way, it is strongly recommended that you experiment a bit in this section by modifying the patterns used in the examples. If in some case you don’t understand the results you are getting, feel free to post this as a question on the course forums.

Maybe you are wondering how one would use the different special characters in the verbatim sense, e.g. to find all names that contain a dot. This is done by putting a backslash in front of them, so \. for the dot, \? for the question mark, and so on. If you want to match a single backslash in a regular expression, this needs to be represented by a double backslash in the regular expression. However, one has to be careful when writing this regular expression as a string literal in Python code: because of the string escaping mechanism, a sequence of two backslashes only produces a single backslash in the resulting string. Therefore, you actually have to use four backslashes, "xyz\\\\xyz", to produce the correct regular expression for matching a single backslash. Alternatively, you can use a raw string, in which escaping is disabled, so r"xyz\\xyz". Here is one example that uses \. to search for names with a dot as the third character, returning “Dr. Melissa Franklin” and “Dr. Dr. Matthew Malone” as the only results:

pattern = r"..\."

Next, let us combine the dot (.) with the star (*) symbol that stands for the repetition of the previous character. The pattern “.*John” can be used to find all names that contain the character sequence “John”. The .* at the beginning can match any sequence of characters of arbitrary length from the . class (so any available character). For instance, for the name “Jennifer Johnson”, the .* matches the sequence “Jennifer ” consisting of nine characters from the . class, and since this is followed by the character sequence “John”, the entire name matches the regular expression.

pattern = ".*John"

Output:

Jennifer Johnson
John Williams
Papa John
Walter John Miller

Please note that the name “John Williams” is a valid match because the * also includes zero occurrences of the preceding character, so “.*John” will also match “John” at the beginning of a string.
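Coming back to the backslash escaping rules described earlier in this section, the following sketch verifies that the four-backslash string literal and the raw string literal denote the same regular expression, and that this expression matches a text containing a single backslash. The variable names are chosen for illustration only.

```python
import re

escaped = "xyz\\\\xyz"  # regular string literal: four backslashes in the source code
raw = r"xyz\\xyz"       # raw string literal: two backslashes in the source code

sameRegex = (escaped == raw)  # both literals produce the 8-character regex xyz\\xyz

text = "xyz\\xyz"       # the 7-character text xyz\xyz, containing ONE backslash
matches = re.match(raw, text) is not None

print(sameRegex, len(raw), len(text), matches)
```

Both literals result in the same eight-character regular expression, which matches the seven-character text with its single backslash.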

The dot used in the previous examples is a special character for representing an entire class of characters, namely any character. It is also possible to define your own class of characters within a regular expression with the help of square brackets. For instance, [abco] stands for the class consisting of only the characters ‘a’, ‘b’, ‘c’ and ‘o’. When it is used in a regular expression, it matches any of these four characters. So the pattern “.[abco]” can, for instance, be used to get all names that have either ‘a’, ‘b’, ‘c’ or ‘o’ as the second character. This means using ...

pattern = ".[abco]"

... we get the output:

John Williams
Papa John
Walter John Miller

When defining classes, we can make use of ranges of characters denoted by a hyphen. For instance, the range m-o stands for the lower-case characters ‘m’, ‘n’, ‘o’. The class [m-oM-O.] would then consist of the characters ‘m’, ‘n’, ‘o’, ‘M’, ‘N’, ‘O’, and ‘.’. Please note that when a special character appears within the square brackets of a class definition (like the dot in this example), it is used in its verbatim sense. Try out this idea of using ranges with the following example:

pattern = "......[m-oM-O.]"

The output will be...

Papa John
Frank Michael Robertson
Erik D. White
Dr. Dr. Matthew Malone

… because these are the only names that have a character from the class [m-oM-O.] as the seventh character.

Predefined Classes

In addition to the dot, there are more predefined classes of characters available in Python for cases that commonly appear in regular expressions listed in the Predefined Character Classes section in the table above. For instance, these can be used to match any digit or any non-digit. Predefined classes are denoted by a backslash followed by a particular character, like \d for a single decimal digit, so the characters 0 to 9.

To give one example, the following pattern can be used to get all names in which “John” appears not as a single word but as part of a longer name (either first or last name). This means it is followed by at least one character that is not a whitespace which is represented by the \S in the regular expression used. The only name that matches this pattern is “Jennifer Johnson”.

pattern = r".*John\S"

In addition to the *, there are more special characters for denoting certain cases of repetitions of a character or a group. + stands for arbitrarily many occurrences but, in contrast to *, the character or group needs to occur at least once. ? stands for zero or one occurrence of the character or group. That means it is used when a character or sequence of characters is optional in a pattern. Finally, the most general form {m,n} says that the previous character or group needs to occur at least m times and at most n times.

If we use “.+John” instead of “.*John” in an earlier example, we will only get the names that contain “John” but preceded by one or more other characters.

pattern = ".+John"

Output:

Jennifer Johnson
Papa John
Walter John Miller

By writing ...

pattern = ".{11,11}[A-Z]"

... we get all names that have an upper-case character as the 12th character. The result will be “Kermit the Frog”. This is a bit easier and less error-prone than writing “...........[A-Z]”.

Lastly, the pattern “.*li?a” can be used to get all names that contain the character sequences ‘la’ or ‘lia’.

pattern = ".*li?a"

Output:

Julia Smith
John Williams
Rebecca Clark
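The repetition operators can also be compared side by side on a few short test strings. This is only a sketch: the test strings are made up for illustration, and it uses re.fullmatch(…), which requires the entire string to match, rather than the match(…) call used elsewhere in this section.

```python
import re

# Made-up test strings that differ only in the number of 'i' characters
tests = ["la", "lia", "liia", "a"]

patterns = {
    "li*a": "zero or more 'i'",
    "li+a": "one or more 'i'",
    "li?a": "zero or one 'i'",
    "li{2,3}a": "two or three 'i'",
}

results = {}
for pattern, meaning in patterns.items():
    # fullmatch(...) only succeeds if the entire string fits the pattern
    results[pattern] = [s for s in tests if re.fullmatch(pattern, s)]
    print(pattern, "(" + meaning + "):", results[pattern])
```

The string "a" is rejected by all four patterns because each of them requires a leading ‘l’.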

So far we have only used the different repetition matching operators *, +, {m,n}, and ? for occurrences of a single specific character. When used after a class, these operators stand for a certain number of occurrences of characters from that class. For instance, the following pattern can be used to search for names that contain a word that only consists of lower-case letters (a-z) like “Kermit the Frog” and “Vincent van Gogh”. We use \s to represent the required whitespaces before and after the word and then [a-z]+ for an arbitrarily long sequence of lower-case letters but consisting of at least one letter.

pattern = ".*\s[a-z]+\s"

Sequences of characters can be grouped together with the help of parentheses (…) and then be followed by a repetition operator to represent a certain number of occurrences of that sequence of characters. For instance, the following pattern can be used to get all names where the first name starts with the letter ‘M’ taking into account that names may have a ‘Dr. ’ as prefix. In the pattern, we use the group (Dr.\s) followed by the ? operator to say that the name can start with that group but doesn’t have to. Then we have the upper-case M followed by .*\s to make sure there is a white space character later in the string so that we can be reasonably sure this is the first name.

pattern = "(Dr.\s)?M.*\s"

Output:

Michael Mason
Dr. Melissa Franklin

You may have noticed that there is a person with two doctor titles in the list whose first name also starts with an ‘M’ and that it is currently not captured by the pattern because the ? operator will match at most one occurrence of the group. By changing the ? to a * , we can match an arbitrary number of doctor titles.

pattern = "(Dr.\s)*M.*\s"

Output:

Michael Mason
Dr. Melissa Franklin
Dr. Dr. Matthew Malone

Similarly to how we have the if-else statement to realize case distinctions in addition to loop-based repetitions in normal Python, regular expressions can make use of the | character to define alternatives. For instance, (nn|ss) can be used to get all names that contain either the sequence “nn” or the sequence “ss” (or both):

pattern = ".*(nn|ss)"

Output:

Jennifer Johnson
Susanne Walker
Dr. Melissa Franklin

As we already mentioned, ^ and $ represent the beginning and end of a string, respectively. Let’s say we want to get all names from the list that end in “John”. This can be done using the following regular expression:

pattern = ".*John$"

Output:

Papa John

Here is a more complicated example. We want all names that contain “John” as a single word independent of whether “John” appears at the beginning, somewhere in the middle, or at the end of the name. However, we want to exclude cases in which “John” appears as part of a longer word (like “Johnson”). A first idea could be to use ".*\sJohn\s" to achieve this, making sure that there are whitespace characters before and after “John”. However, this will match neither “John Williams” nor “Papa John” because the beginning and end of the string are not whitespace characters. What we can do is use the pattern "(^|.*\s)John" to say that John needs to be preceded either by the beginning of the string or by an arbitrary sequence of characters followed by a whitespace. Similarly, "John(\s|$)" requires that John be succeeded either by a whitespace or by the end of the string. Taken together, we get the following regular expression:

pattern = "(^|.*\s)John(\s|$)"

Output:

John Williams
Papa John
Walter John Miller

An alternative would be to use the regular expression "(.*\s)?John(\s.*)?$", which uses the optional operator ? rather than | . There are often several ways to express the same thing in a regular expression. Also, as you start to see here, the different special matching operators can be combined and nested to form arbitrarily complex regular expressions. You will practice writing regular expressions like this a bit more in the practice exercises and in the homework assignment.
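To check that the two formulations really select the same names, one can run both against a small list. The following is a minimal sketch; sample_names is a hypothetical subset of names taken from the outputs in this section, and the patterns are written as raw strings (r"…") so that Python does not try to interpret \s as a string escape sequence.

```python
import re

# Hypothetical sample list (a subset of names, not the lesson's full personList)
sample_names = ["John Williams", "Papa John", "Walter John Miller", "Jennifer Johnson"]

# Variant 1: alternatives with | ; variant 2: optional groups with ?
with_alternatives = re.compile(r"(^|.*\s)John(\s|$)")
with_optional = re.compile(r"(.*\s)?John(\s.*)?$")

result_a = [n for n in sample_names if with_alternatives.match(n)]
result_b = [n for n in sample_names if with_optional.match(n)]

print(result_a)
print(result_b)
print(result_a == result_b)
```

On this sample, both patterns accept the three names with “John” as a separate word and reject “Jennifer Johnson”.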

In addition to the main special characters we explained in this section, there are certain extension operators available denoted as (?x...) where the x can be one of several special characters determining the meaning of the operator. We here just briefly want to mention the operator (?!...) for negative lookahead assertion because we will use it later in the lesson's walkthrough to filter files in a folder. Negative lookahead extension means that what comes before the (?!...) can only be matched if it isn't followed by the expression given for the ... . For instance, if we want to find all names that contain John but not followed by "son" as in "Johnson", we could use the following expression:

pattern = ".*John(?!son)"

Output:

John Williams
Papa John
Walter John Miller
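Since negative lookahead will be used later to filter files in a folder, here is a small sketch of what such a filter could look like. The file names are hypothetical and chosen only for illustration; the walkthrough's actual folder content and pattern may differ.

```python
import re

# Hypothetical file names; the walkthrough's actual folder content may differ
file_names = ["bio1.tif", "bio1.tif.aux.xml", "bio2.tif", "notes.txt"]

# Match names that contain ".tif" NOT followed by another dot,
# which excludes sidecar files such as "bio1.tif.aux.xml"
tif_only = re.compile(r".*\.tif(?!\.)")

selected = [f for f in file_names if tif_only.match(f)]
print(selected)
```

Note that the dot before "tif" is escaped (\.) so that it stands for a literal dot rather than for an arbitrary character.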

If match(…) does not find a match, it will return the special value None. That’s why we can use it with an if-statement as we have been doing in all the previous examples. However, if a match is found it will not simply return True but a match object that can be used to get further information, for instance about which part of the string matched the pattern. The match object provides the methods group() for getting the matched part as a string, start() for getting the character index of the starting position of the match, end() for getting the character index of the end position of the match, and span() to get both start and end indices as a tuple. The example below shows how one would use the returned matching object to get further information and the output produced by its four methods for the pattern “John” matching the string “John Williams”:

pattern = "John"
compiledRE = re.compile(pattern)

for person in personList:
    match = compiledRE.match(person)
    if match:
        print(match.group())
        print(match.start())
        print(match.end())
        print(match.span())

Output:

John       <- output of group()
0          <- output of start()
4          <- output of end()
(0, 4)     <- output of span()

In addition to match(…), there are three more matching functions defined in the re module. Like match(…), these all exist as standalone functions taking a regular expression and a string as parameters, and as methods to be invoked for a compiled pattern. Here is a brief overview:

- search(…) - In contrast to match(…), search(…) tries to find matching locations anywhere within the string not just matches starting at the beginning. That means “^John” used with search(…) corresponds to “John” used with match(…), and “.*John” used with match(…) corresponds to “John” used with search(…). However, “corresponds” here only means that a match will be found in exactly the same cases but the output by the different methods of the returned matching object will still vary.

- findall(…) - In contrast to match(…) and search(…), findall(…) will identify all substrings in the given string that match the regular expression and return these matches as a list.

- finditer(…) – finditer(…) works like findall(…) but returns the matches found not as a list but as a so-called iterator object.
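The differences between the four functions become clearer when they are applied to the same string. The following is a minimal sketch; the sentence in text is made up for illustration, and the pattern John\S* stands for “John” followed by any number of non-whitespace characters.

```python
import re

# Made-up example sentence containing "John" three times
text = "Walter John Miller and Jennifer Johnson met Papa John"
compiled = re.compile(r"John\S*")

# match(...) only looks at the beginning of the string
print(compiled.match(text))  # None, because the text starts with "Walter"

# search(...) returns a match object for the first match anywhere in the string
first = compiled.search(text)
print(first.group(), first.span())

# findall(...) returns all matching substrings as a list of strings
all_matches = compiled.findall(text)
print(all_matches)

# finditer(...) yields match objects, so positions are available as well
spans = [m.span() for m in compiled.finditer(text)]
print(spans)
```

findall(…) is convenient when only the matched text is needed, while finditer(…) is the better choice when you also need the start and end positions of each match.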

By now you should have enough understanding of regular expressions to cover maybe ~80 to 90% of the cases that you encounter in typical programming. However, there are quite a few additional aspects and details that we did not cover here that you may potentially need when dealing with rather sophisticated cases of regular-expression-based matching. The full documentation of the “re” package can be found here and is always a good source for looking up details when needed. In addition, this regex howto provides a good overview.

We also want to mention that regular expressions are very common in programming and matching with them is very efficient, but they do have certain limitations in what they can express. For instance, you cannot define a regular expression for all strings that are palindromes (words that read the same forward and backward) without some limitations. For these kinds of patterns, certain extensions to the concept of a regular expression are needed, or you can simply use Python's string manipulation features. One such generalization is called recursive regular expressions. The third-party regex Python package, which is designed to be backward compatible with re, has this capability, so feel free to check it out if you are interested in this topic.
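As an illustration of the string manipulation route, a palindrome test needs no regular expression at all. This is a minimal sketch; the function name is_palindrome and the word list are made up for this example, and ignoring upper/lower case is a design choice made here, not a requirement.

```python
def is_palindrome(word):
    """Return True if word reads the same forward and backward (ignoring case)."""
    normalized = word.lower()
    # Slicing with a step of -1 produces the reversed string
    return normalized == normalized[::-1]


words = ["Level", "radar", "Python"]
palindromes = [w for w in words if is_palindrome(w)]
print(palindromes)
```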

3.4 Higher Order Functions and lambda expressions

In this section, we are going to introduce the concept that functions can be used as parameters to other functions, similar to how other types of values like numbers, strings, or lists are used. Actually, you have already seen examples of this in previous lessons, such as in Lesson 1 with the pool.starmap(...) function and in Lesson 2 when passing the name of a function to the connect(...) method when connecting a signal to an event handler function. A function that takes other functions as arguments is often called a higher-order function.

Let’s say you need to apply certain string manipulation functions to each string in a list of strings. You may want to convert the string items in the list to be all upper-case characters, all lower-case, or Capital Case characters, or apply some completely different conversion. The following example shows how one can use a single function for these cases, and then pass the function to apply to each list element as a parameter to this new function:

def applyToEachString(stringFunction, stringList):
    myList = []
    for item in stringList:
        myList.append(stringFunction(item))
    return myList

allUpperCase = applyToEachString(str.upper, ['Building', 'ROAD', 'tree'])
print(allUpperCase)

As you can see, the function definition specifies two parameters; the first one is for passing a function that takes a string and returns either a new string from it or some other value. The second parameter is for passing along a list of strings. In line 7, we call our function using str.upper for the first parameter and a list with three words for the second parameter. The word list intentionally uses different forms of capitalization. upper() is a string method that turns the string it is called for into all upper-case characters. Since this is a method and not a function, we have to use the name of the class (str) as a prefix, so “str.upper”. It is important that there are no parentheses () after upper because that would mean that the function will be called immediately and only its return value would be passed to applyToEachString(…).

In the function body, we simply create an empty list in variable myList, go through the elements of the list that is passed in parameter stringList, and then in line 4 apply the function that is passed in parameter stringFunction to each element from the list. The result is appended to list myList and, at the end of the function, we return that list with the modified strings. The output you will get is the following:

['BUILDING', 'ROAD', 'TREE']

If we now want to use the same function to turn everything into all lower-case characters, we just have to pass the name of the lower() method instead, like this:

allLowerCase = applyToEachString(str.lower, ['Building', 'ROAD', 'tree'])
print(allLowerCase)

Output:

['building', 'road', 'tree']

You may at this point say that this is more complicated than using a simple list comprehension that does the same thing, like:

[s.upper() for s in ['Building', 'ROAD', 'tree']]

That is true in this case, but we are just creating some simple examples that are easy to understand here. One benefit of the more complicated approach is being able to add more string manipulations that could be hard to follow if done via list comprehension. Which method should you choose? It depends. If in doubt, choose the method that is the most easily read and understood.

For converting all strings into strings that only have the first character capitalized, we first write our own function that does this for a single string. There actually is a string method called capitalize() that could be used for this, but let’s pretend it doesn’t exist to show how to use applyToEachString(…) with a self-defined function.

def capitalizeFirstCharacter(s):
    return s[:1].upper() + s[1:].lower()

allCapitalized = applyToEachString(capitalizeFirstCharacter, ['Building', 'ROAD', 'tree'])
print(allCapitalized)

Output:

['Building', 'Road', 'Tree']

The code for capitalizeFirstCharacter(…) is rather simple. It uses the slice s[:1] to take the first character of the given string s and make it upper-case, then takes the rest of the string, s[1:], and turns it into lower-case. Finally, the two pieces are concatenated together again.

In a case where the function you want to use as a parameter is very simple, such as performing a single expression, and you only need this function at this one place in your code, you can skip the function definition completely and use a lambda expression. A lambda expression (aka Anonymous function) defines a function without giving it a name using the format:

lambda <parameters> : <expression for the return value>

For capitalizeFirstCharacter(…), the corresponding lambda expression would be this:

lambda s: s[:1].upper() + s[1:].lower()

Note that the part after the colon does not contain a return statement; it is always just a single expression and the result from evaluating that expression automatically becomes the return value of the anonymous lambda function. That means that functions that require if-else statements or loops to compute the return value cannot be turned into lambda expressions. When we integrate the lambda expression into our call of applyToEachString(…), the code looks like this:

allCapitalized = applyToEachString(lambda s: s[:1].upper() + s[1:].lower(), ['Building', 'ROAD', 'tree'])

Lambda expressions can be used everywhere where the name of a function can appear, so, for instance, also within a list comprehension:

[(lambda s: s[:1].upper() + s[1:].lower())(s) for s in ['Building', 'ROAD', 'tree']]

We here had to put the lambda expression into parentheses and follow up with “(s)” to tell Python that the function defined in the expression should be called with the list comprehension variable s as parameter. There's a good first-principles discussion of lambda functions at RealPython that is worth reviewing.
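Two further places where a lambda expression can appear are sketched below. The word list is made up for illustration. The first example passes a lambda as the key parameter of the built-in sorted(…) function; the second shows that a conditional expression (value1 if condition else value2) counts as a single expression and is therefore allowed inside a lambda, unlike an if-else statement.

```python
words = ['road', 'Building', 'TREE']

# A lambda passed as the key function of the built-in sorted(...):
# compare the lower-case versions so that sorting ignores capitalization
sorted_words = sorted(words, key=lambda s: s.lower())
print(sorted_words)

# A conditional expression is a single expression, so it can be used in a lambda:
# abbreviate words longer than four characters, leave shorter ones unchanged
shortened = [(lambda s: s[:4] + '...' if len(s) > 4 else s)(w) for w in words]
print(shortened)
```

Without the key function, sorted(words) would place all upper-case initial letters before the lower-case ones.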

So far, we have only used applyToEachString(…) to create a new list of strings, so the functions we used as parameters always were functions that take a string as input and return a new string. However, this is not required. We can just as well use a function that returns, for instance, numbers like the number of characters in a string as provided by the Python function len(…). Before looking at the code below, think about how you would write a call of applyToEachString(…) that does that!

Here is the solution.

wordLengths = applyToEachString(len, ['Building', 'ROAD', 'tree'])
print(wordLengths)

len(…) is a function, so we can simply put in its name as the first parameter. The output produced is the following list of numbers:

[8, 4, 4]

As shown in section 1.3.3, you can use Lambda with a dictionary to mimic the switch case construct when used with the .get() method:

getTask = {'daily': lambda: get_daily_tasks(),
           'monthly': lambda: get_monthly_tasks(),
           'weekly': lambda: get_weekly_tasks()}

task = 'monthly'  # the key must be a string that exists in the dictionary
getTask.get(task)()

With what you have seen so far in this lesson, the following code example should be easy to understand:

def applyToEachNumber(numberFunction, numberList):
    l = []
    for item in numberList:
        l.append(numberFunction(item))
    return l

roundedNumbers = applyToEachNumber(round, [12.3, 42.8])
print(roundedNumbers)

Right, we just moved from a higher-order function that applies some other function to each element in a list of strings to one that does the same but for a list of numbers. We call this function with the round(...) function for rounding a floating point number. In Python 3, round(...) called with a single argument returns an integer, so the output will be:

[12, 43]

If you compare the definitions of the two functions applyToEachString(…) and applyToEachNumber(…), it is pretty obvious that they are exactly the same; we just slightly changed the names of the input parameters. The idea of these two functions can be generalized and then be formulated as “apply a function to each element in a list and build a list from the results of this operation” without making any assumptions about what type of values are stored in the input list. This kind of general higher-order function is already available in Python as the built-in function map(…). We will go over this function along with two other popular higher-order functions: reduce(…) and filter(…).
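Written out, the generalization is simply the same function with neutral parameter names. The sketch below uses the made-up name applyToEach for this purpose; it is shown only to make the idea concrete.

```python
def applyToEach(function, items):
    """Apply function to every element of items and return the results as a list."""
    results = []
    for item in items:
        results.append(function(item))
    return results


# The same function now works for strings and numbers alike
print(applyToEach(str.upper, ['Building', 'ROAD', 'tree']))
print(applyToEach(round, [12.3, 42.8]))
print(applyToEach(len, ['Building', 'ROAD', 'tree']))
```

The only requirement is that the function passed in can handle the kind of items stored in the list.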

Map

Like our more specialized versions, map(…) takes a function (or method) as the first input parameter and a list (or iterable) as the second parameter. It is the responsibility of the programmer using map(…) to make sure that the function provided as a parameter is able to work with the items stored in the provided list. In Python 3, a change to map(…) has been made so that it now returns a special map object rather than a simple list. However, whenever we need the result as a normal list, we can simply apply the list(…) function to the result like this:

l = list(map(function, iterable))

The three examples below show how we could have performed the conversion to upper-case and first character capitalization, and the rounding task with map(...) instead of using our own higher-order functions:

map(str.upper, ['Building', 'Road', 'Tree'])
map(lambda s: s[:1].upper() + s[1:].lower(), ['Building', 'ROAD', 'tree']) # uses lambda expression for only first character as upper-case
map(round, [12.3, 42.8])

Map is actually more powerful than our own functions from above. It can take multiple lists as input together with a function that has the same number of input parameters as there are lists. It then applies that function to the first elements from all the lists, then to all second elements, and so on. We can use that to create a new list with the sums of corresponding elements from two lists as in the following example. The example code also demonstrates how we can use the different Python operators, like the + for addition, with higher-order functions: The operator module from the standard Python library contains function versions of all the different operators that can be used for this purpose. The one for + is available as operator.add(...).

import operator

print(list(map(operator.add, [1,3,4], [4,5,6])))  # list(...) converts the map object into a list

Output:

[5, 8, 10]

As a last example for map(...), let’s say you want to add a fixed number to each number in a single input list. The easiest way would be to use a lambda expression:

number = 11
print(list(map(lambda n: n + number, [1,3,4,7])))  # list(...) converts the map object into a list

Output:

[12, 14, 15, 18]
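The multi-list form of map(…) also works with lambda expressions that take several parameters. The following sketch uses made-up coordinate lists to pair up x and y values, and additionally shows that map(…) stops as soon as the shortest input list is exhausted.

```python
# Made-up coordinate lists for illustration
xs = [1.0, 2.5, 4.0]
ys = [10.0, 20.0, 30.0]

# A two-parameter lambda receives one element from each list per call
points = list(map(lambda x, y: (x, y), xs, ys))
print(points)

# map(...) stops as soon as the shortest input is exhausted,
# so the third element of the first list is ignored here
sums = list(map(lambda a, b: a + b, [1, 2, 3], [10, 20]))
print(sums)
```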

Filter

The goal of the filter(…) higher-order function is to create a new list with only certain items from the original list that all satisfy some criterion by applying a boolean function to each element (a function that returns either True or False) and only keeping an element if that function returns True for that element.

Below, we provide two examples for this. One for a list of strings and another for a list of numbers. The first example uses a lambda expression that uses the string method startswith(…) to check whether or not a given string starts with the character ‘R’:

newList = list(filter(lambda s: s.startswith('R'), ['Building', 'ROAD', 'tree']))  # list(...) converts the filter object into a list
print(newList)

Output:

['ROAD']

In the second example, we use is_integer() from the float class to take only those elements from a list of floating point numbers that are integer numbers. Since this is a method, we need to use the class name as a prefix (“float.”):

newList = list(filter(float.is_integer, [12.4, 11.0, 17.43, 13.0]))  # list(...) converts the filter object into a list
print(newList)

Output:

[11.0, 13.0]
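As with map(…), the same selection can be written as a list comprehension with an if clause. The sketch below puts both variants next to each other for the floating point example; which one you use is largely a matter of readability.

```python
values = [12.4, 11.0, 17.43, 13.0]

# filter(...) returns a lazy filter object in Python 3; list(...) turns it into a list
with_filter = list(filter(float.is_integer, values))

# The same selection written as a list comprehension with a condition
with_comprehension = [v for v in values if v.is_integer()]

print(with_filter)
print(with_comprehension)
```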

Reduce

The last higher-order function we are going to discuss here is reduce(…). In Python 3, it needs to be imported from the module functools. Its purpose is to combine (or “reduce”) all elements from a list into a single value by using an aggregation function taking two parameters that is used to combine the first and the second element, then the result with the third element, and so on until all elements from the list have been incorporated. The standard example for this is to sum up all values from a list of numbers. reduce(…) takes three parameters: (1) the aggregation function, (2) the list, and (3) an accumulator parameter. To understand this third parameter, think about how you would solve the task of summing up the numbers in a list with a for-loop. You would use a temporary variable initialized to zero and then add each number from that list to that variable which in the end would contain the final result. If you instead would want to compute the product of all numbers, you would do the same but initialize that variable to 1 and use multiplication instead of addition. The third parameter of reduce(…) is the value used to initialize this temporary variable. That should make it easy to understand the arguments used in the following two examples:

import operator
from functools import reduce

result = reduce(operator.add, [234,3,3], 0) # sum
print(result)

Output:

240

import operator
from functools import reduce

result = reduce(operator.mul, [234,3,3], 1) # product
print(result)

Output:

2106

Other things reduce(…) can be used for are computing the minimum or maximum value of a list of numbers or testing whether any or all values from a list of booleans are True. We will see some of these use cases in the practice exercises of this lesson. Examples of the higher-order functions discussed in this section will occasionally appear in the examples and walkthrough code of the remaining lessons.
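To give a first impression of these other uses, here is a minimal sketch with made-up input lists. It assumes that the list of numbers is not empty, and it uses operator.or_ and operator.and_, the function versions of | and &, which behave like logical or and and when applied to boolean values.

```python
import operator
from functools import reduce

numbers = [234, 3, 3, 17]
flags = [True, False, True]

# Keep the larger of the two values at each step to find the maximum;
# without a third parameter, reduce(...) starts with the first list element
largest = reduce(lambda a, b: a if a > b else b, numbers)

# Combine all booleans with or / and; the initial values False and True
# are chosen so that they do not change the result
any_true = reduce(operator.or_, flags, False)
all_true = reduce(operator.and_, flags, True)

print(largest, any_true, all_true)
```

For these particular tasks, Python also offers the built-in functions max(…), any(…), and all(…), which are usually the more readable choice in practice.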

3.5 Python for Data Science

Python has firmly established itself as one of the main programming languages used in Data Science. There are many freely available Python packages for working with all kinds of data and performing different kinds of analysis, from general statistics to very domain-specific procedures. The same holds true for spatial data that we are dealing with in typical GIS projects. There are various packages for importing and exporting data coming in different GIS formats into a Python project and manipulating, analyzing, and visualizing the data with Python code, and you will get to know quite a few of these packages in this lesson. We provide a short overview of the packages we consider most important below.

In Data Science, one common principle is that projects should be cleanly and exhaustively documented, including all data used, how the data has been processed and analyzed, and the results of the analyses. The underlying point of view is that science should be easily reproducible to assure a high quality and to benefit future research as well as application in practice. One idea to achieve full transparency and reproducibility is to combine describing text, code, and analysis results into a single report that can be published, shared, and used by anyone to rerun the steps of the analysis.

In the Python world, such executable reports are very commonly created in the form of Jupyter Notebooks. Jupyter Notebook is an open-source web-based software tool that allows you to create documents that combine runnable Python code (and code from other languages as well), its output, as well as formatted text, images, etc., in a normal text document. Figure 3.1 shows you a brief part of a Jupyter Notebook, the one we are going to create in this lesson’s walkthrough.

While Jupyter Notebook has been developed within the Python ecosystem, it can be used with other programming languages. For instance, the R language that you may have experience in or heard about as one of the main languages used for statistical computing can be used in a Jupyter Notebook. One of the things you will see in this lesson is how one can actually combine Python and R code within a Jupyter notebook to realize a somewhat complex spatial data science project in the area of species distribution modeling, also termed ecological niche modeling.

It may be interesting for you to know that Esri is also supporting Jupyter Notebook as a platform for conducting GIS projects with the help of their ArcGIS API for Python library and Jupyter Notebook has been integrated into several Esri products including ArcGIS Pro.

After a quick look at the Python packages most commonly used in the context of data science projects, we will provide a more detailed overview on what is coming in the remainder of the lesson, so that you will be able to follow along easily without getting confused by all the different software packages we are going to use.

3.5.1 Python packages for (spatial) Data Science

It would be impossible to introduce or even just list all the packages available for conducting spatial data analysis projects in Python here, so the following is just a small selection of those that we consider most important.

numpy

numpy (Python numpy, Wikipedia numpy) stands for “Numerical Python” and is a library that adds support for efficiently dealing with large and multi-dimensional arrays and matrices to Python together with a large number of mathematical operations to apply to these arrays, including many matrix and linear algebra operations. Many other Python packages are built on top of the functionality provided by numpy.

matplotlib

matplotlib (Python matplotlib, Wikipedia matplot) is an example of a Python library that builds on numpy. Its main focus is on producing plots and embedding them into Python applications. Take a quick look at its Wikipedia page to see a few examples of plots that can be generated with matplotlib. We will be using matplotlib a few times in this lesson’s walkthrough to quickly create simple map plots of spatial data.

SciPy

SciPy (Python SciPy, Wikipedia SciPy) is a large Python library for application in mathematics, science, and engineering. It is built on top of numpy, providing methods for optimization, integration, interpolation, signal processing and image processing. Together numpy, matplotlib, and SciPy provide roughly similar functionality to the well-known software Matlab. While we won’t be using SciPy in this lesson, it is definitely worth checking out if you're interested in advanced mathematical methods.

pandas

pandas (Python pandas, Wikipedia pandas software) provides functionality to efficiently work with tabular data, so-called data frames, in a similar way as this is possible in R. Reading and writing tabular data, e.g. to and from .csv files, manipulating and subsetting data frames, merging and joining multiple data frames, and time series support are key functionalities provided by the library. A more detailed overview on pandas will be given in Section 3.8.

Shapely

Shapely (Python Shapely, Shapely User Manual) adds the functionality to work with planar geometric features in Python, including the creation and manipulation of geometries such as points, polylines, and polygons, as well as set-theoretic analysis capabilities (intersection, union, …). It is based on the widely used GEOS library, the geometry engine that is used in PostGIS, which in turn is based on the Java Topology Suite (JTS) and largely follows the OGC’s Simple Features Access Specification.

geopandas

geopandas (Python geopandas, GeoPandas) combines pandas and Shapely to facilitate working with geospatial vector data sets in Python. While we will mainly use it to create a shapefile from Python, the provided functionality goes significantly beyond that and includes geoprocessing operations, spatial join, projections, and map visualizations.

GDAL/OGR

GDAL/OGR (Python GDAL page, GDAL/OGR Python) is a powerful library for working with GIS data in many different formats that is widely used from different programming languages. Originally, it consisted of two separate libraries, GDAL (‘Geospatial Data Abstraction Library‘) for working with raster data and OGR (used to stand for ‘OpenGIS Simple Features Reference Implementation’) for working with vector data, but these have now been merged. The gdal Python package provides an interface to the GDAL/OGR library written in C++. In Section 3.9 and the lesson’s walkthrough, you will see some examples of applying GDAL/OGR.

ArcGIS API for Python

As we already mentioned at the beginning, Esri provides its own Python API (ArcGIS for Python) for working with maps and GIS data via their ArcGIS Online and Portal for ArcGIS (ArcGIS Enterprise) web platforms. The API allows for conducting administrative tasks, performing vector and raster analyses, running geocoding tasks, creating map visualizations, and more. While some services can be used autonomously, many are tightly coupled to Esri’s web platforms and you will at least need a free ArcGIS Online account. The Esri API for Python will be further discussed in Section 3.10.

3.5.2 The lesson in more detail

In this lesson, we will start to work with some software that you probably are not familiar with. We will be using Python packages extensively that we have not used before to demonstrate how a complex GIS project can be solved in Python by combining different languages and packages within a Jupyter Notebook. Therefore, it is probably a good idea to prepare you a bit with an overview of what will happen in the remainder of the lesson.

- We already discussed the idea of using Jupyter Notebooks for data analysis projects. We will start this part of the lesson by introducing you to Jupyter Notebook and explaining to you the basic functionality (Section 3.6) so that you will be able to use it for the remainder of the lesson and future Python projects.

- The R programming language has its roots in statistical computing but also comes with a large library of packages providing data analysis methods for many specialized areas. One such package is the ‘dismo’ package for species distribution modeling. We will use the task of generating a species distribution model for the Solanum Acaule plant species as the data analysis task for this lesson’s walkthrough with the goal of showing you how Python and R functions can be combined within a Jupyter Notebook to solve some pretty complex analysis problem. The species distribution modeling application will be discussed further together with a brief overview on R and the ‘dismo’ package in Section 3.7.

- Using pandas for the manipulation of tabular data will be a significant part of this lesson’s walkthrough. We will use it to clean up the somewhat messy observation data available for Solanum Acaule. As a preparation, we will teach you the basics of manipulating table data with pandas in Section 3.8.

- GDAL/OGR will be the main geospatial extension of Python that we will use in this lesson (a) to perform additional data cleaning based on spatial querying and (b) to prepare additional input data (raster data sets for different climatic variables). We provide an overview on its functionality and typical patterns of using GDAL/OGR in Section 3.9.

- We will mainly use the Esri ArcGIS API for Python to create an interactive map visualization within a Jupyter Notebook. However, the API has much more to offer and provides an interesting bridge between the FOSS Python Data Science ecosystem and the proprietary Esri world. We provide an overview of the API in Section 3.10.

- The lesson’s walkthrough in Section 3.11 will show you a solution to the task of creating a species distribution model for Solanum Acaule combining both Python and R and making use of the different Python packages introduced in the lesson. The walkthrough will be provided as a Jupyter Notebook that you can download and run on your own computer.

3.6 Jupyter Notebook

The idea of a Jupyter Notebook is that it can contain code, the output produced by the code, and rich text that, like in a normal text document, can be styled and include images, tables, equations, etc. Jupyter Notebook is a client-server application, meaning that the core Jupyter program can be installed and run locally on your own computer or on a remote server. In both cases, you communicate with it via your web browser or from within your IDE to create, edit, and execute your notebooks.

The history of Jupyter Notebook goes back to the year 2001 when Fernando Pérez started the development of IPython, a command shell for Python (and other languages) that provides interactive computing functionalities. In 2007, the IPython team started the development of a notebook system based on IPython for combining text, calculations, and visualizations, and a first version was released in 2011. In 2014, the notebook part was split off from IPython and became Project Jupyter, with IPython being the most common kernel (program component for running the code in a notebook) for Jupyter but not the only one. Various kernels now exist to provide programming language support for Jupyter notebooks for many common languages including Ruby, Perl, Java, C/C++, R, and Matlab.

To get a first impression of Jupyter Notebook, have a look at Figure 3.2 (which you already saw earlier). The excerpt shown consists of two code cells with Python code (those starting with “In [...]:”), the output produced by running the code (“Out[...]:”), and three rich text cells before, between, and after the code cells that explain what is happening. The currently active cell is marked by the blue bar on the left and the frame around it.

Before we continue to discuss what Jupyter Notebook has to offer, let’s get it running on your computer so that you can directly try out the examples.

3.6.1 Running Jupyter Notebook

Jupyter Notebook is already installed in the Anaconda environment (AC311_JNBK) we created in Section 3.2. If you have the Anaconda Navigator running, make sure it shows the “Home” page and that our environment (AC311_JNBK) is selected. Then you should see an entry for Jupyter Notebook with a ‘Launch’ button (Figure 3.3). Click the ‘Launch’ button to start up the application. This ensures that your notebook starts with the correct environment. Launching Jupyter Notebook this way also creates a shortcut to Jupyter Notebook for that conda environment, which you can then use as described below.

Alternatively, you can start up Jupyter directly without having to open Anaconda first: you will find the Jupyter Notebook application in your Windows application list as a subentry of Anaconda. Be sure that you start the Jupyter Notebook entry for the recently created conda environment (this entry will only exist if you selected that environment in the Anaconda Navigator dropdown as described above). You can also simply press the Windows key and then type in the first few characters of Jupyter until Jupyter (with the correct conda environment) shows up in the search results.

When you start up Jupyter, two things will happen: The server component of the Jupyter application will start up in a Windows command line window showing log messages, e.g. that the server is running locally under the address http://localhost:8888/ (see Figure 3.4 (a)). When you start Jupyter from the Anaconda Navigator, this will actually happen in the background and you won't get to see the command line window with the server messages. In addition, the web-based client application part of Jupyter will open up in your standard web browser showing you the so-called Dashboard, the interface for managing your notebooks, creating new ones, and also managing the kernels. Right now it will show you the content of the default Jupyter home folder, which is your user’s home folder, in a file browser-like interface (Figure 3.4 (b)).

The file tree view allows you to navigate to existing notebooks on your disk, to open them, and to create new ones. Notebook files will have the file extension .ipynb . Let’s start by creating a new notebook file to try out the things shown in the next sections. Click the ‘New…’ button at the top right, then choose the ‘Python 3’ option. A new tab will open up in your browser showing an empty notebook page as in Figure 3.5.

Before we explain how to edit and use the notebook page, please note that the page shows the title of the notebook above a menu bar and a toolbar that provide access to the main operations and settings. Right now, the notebook is still called ‘Untitled...’, so, as a last preparation step, let’s rename the notebook by clicking on the title at the top, typing in ‘MyFirstJupyterNotebook’ as the new title, and then clicking the ‘Rename’ button (Figure 3.6).

If you go back to the still open ‘Home’ tab with the file tree view in your browser, you can see your new notebook listed as MyFirstJupyterNotebook.ipynb with a green ‘Running’ tag indicating that this notebook is currently open. You can also click on the ‘Running’ tab at the top to see only the currently opened notebooks (the ‘Clusters’ tab is not of interest to us at the moment). Since we created this notebook in the Jupyter root folder, it will be located directly in your user’s home directory. However, you can move notebook files around in the Windows File Explorer if, for instance, you want the notebook to be in your Documents folder instead. To create a new notebook directly in a subfolder, you would first move to that folder in the file tree view before you click the ‘New…’ button.
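Since notebooks are ordinary files on disk, you can also locate them from Python. The following is a minimal sketch that lists all .ipynb files directly inside a folder. The function name list_notebooks is made up for this illustration, and the default of your home directory assumes that you kept the default Jupyter start folder described above.

```python
from pathlib import Path


def list_notebooks(folder=None):
    """Return the names of all .ipynb files directly inside folder, sorted."""
    # Assumption: without an argument we look in the user's home folder,
    # which is the default Jupyter start folder described in this section.
    folder = Path(folder) if folder is not None else Path.home()
    # glob("*.ipynb") is not recursive, so subfolders are ignored.
    return sorted(p.name for p in folder.glob("*.ipynb"))
```

If you followed the steps above, calling print(list_notebooks()) should include MyFirstJupyterNotebook.ipynb in its output. Pass a folder path, such as your Documents folder, to look somewhere else.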

3.6.2 First steps to editing a Jupyter Notebook

We will now explain the basics of editing a Jupyter Notebook. We cannot cover all the details here, so if you enjoy working with Jupyter and want to learn all it has to offer as well as all the little tricks that make life easier, the following resources may serve as good starting points:

- Jupyter Documentation

- The Jupyter Notebook

- 28 Jupyter Notebook tips, tricks, and shortcuts

- Jupyter Notebook Tutorial: The Definitive Guide

A Jupyter notebook is always organized as a sequence of so-called ‘cells’, with each cell containing either some code or rich text written in Markdown notation (further explained in a moment). The notebook you created in the previous section currently consists of a single empty cell marked by a blue bar on the left, which indicates that this is the currently active cell and that you are in ‘Command mode’. When you click into the corresponding text field to add or modify the content of the cell, the bar color will change to green, indicating that you are now in ‘Edit mode’. Clicking anywhere outside of the text area of a cell will change back to ‘Command mode’.
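This sequence of cells is also what ends up in the .ipynb file: a notebook file is a JSON document that contains a list of cells, each with a type and its source text. The following sketch builds a minimal two-cell notebook as a Python dictionary using only the standard library. The helper names make_cell and make_notebook are made up for this illustration, and the field names follow version 4 of the notebook file format as we understand it. In practice you would normally let Jupyter (or the nbformat package) create these files for you.

```python
import json


def make_cell(cell_type, source):
    """Build one cell dictionary; cell_type is 'markdown' or 'code'."""
    cell = {"cell_type": cell_type, "metadata": {}, "source": source}
    if cell_type == "code":
        # Code cells additionally store their outputs and the execution
        # counter shown in "In [ ]:" (None means: not executed yet).
        cell["execution_count"] = None
        cell["outputs"] = []
    return cell


def make_notebook(cells):
    """Wrap a list of cells into a minimal notebook dictionary."""
    return {"cells": cells, "metadata": {}, "nbformat": 4, "nbformat_minor": 4}


notebook = make_notebook([
    make_cell("markdown", "# My first notebook\nSome explanatory text."),
    make_cell("code", "print('Hello Jupyter')"),
])

# The JSON text below is what would be stored in a file ending in .ipynb.
notebook_json = json.dumps(notebook, indent=1)
print([cell["cell_type"] for cell in notebook["cells"]])
```

The last line prints the cell types in order, which mirrors the sequence of rich text and code cells you see in the browser.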

Let’s start with a simple example for which we need two cells, the first one with a heading and some explanatory text and the second one with some simple Python code. To add a second cell, you can simply click on the plus (+) symbol in the toolbar. The new cell will be added below the first one and become the new active cell, shown by the blue bar (and the frame around the cell’s content). In the ‘Insert’ menu at the top, you will also find the option to add a new cell above the currently active one. Adding a cell above or below the current one can also be done by using the keyboard shortcuts ‘A’ and ‘B’ while in ‘Command mode’. To get an overview of the different keyboard shortcuts, you can use Help -> Keyboard Shortcuts in the menu at the top.

Both cells that we have in our notebook now start with “In [ ]:” in front of the text field for the actual cell content. This indicates that these are ‘Code’ cells, so the content will be interpreted by Jupyter as executable code. To change the type of the first cell to Markdown, select that cell by clicking on it, then change the type from ‘Code’ to ‘Markdown’ in the dropdown menu in the toolbar at the top. When you do this, the “In [ ]:” will disappear and your notebook should look similar to Figure 3.8 below. The type of a cell can also be changed by using the keyboard shortcuts ‘Y’ for ‘Code’ and ‘M’ for ‘Markdown’ when in ‘Command mode’.

Let’s start by putting some Python code into the second(!) cell of our notebook. Click on the text field of the second cell so that the bar on the left turns green and you have a blinking cursor at the beginning of the text field. Then enter the following Python code:

from bs4 import BeautifulSoup

import requests

documentURL = 'https://courses.ems.psu.edu/geog489/node/1703'

html = requests.get(documentURL).text

soup = BeautifulSoup(html, 'html.parser')

print(soup.get_text())

This brief code example is similar to what you already saw in Lesson 2. It uses the requests Python package to read in the content of an HTML page from the URL that is provided in the documentURL variable. The BeautifulSoup4 (bs4) package is then used to parse the content of the page, and in the last line we invoke its get_text() method to print out the plain text content with all tags and other elements removed.
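To get a feeling for what “all tags removed” means without needing a network connection, here is a rough standard-library approximation of this step. It is not how BeautifulSoup is implemented and it handles far fewer special cases. The names TextCollector and plain_text as well as the sample HTML string are made up for this illustration.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects the text between tags and ignores the tags themselves."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        # Called by the parser for every run of text outside of tags.
        self.parts.append(data)


def plain_text(html):
    """Return the text content of an HTML string with all tags removed."""
    collector = TextCollector()
    collector.feed(html)
    collector.close()
    return "".join(collector.parts)


sample = "<html><body><h1>Title</h1><p>Hello <b>world</b>!</p></body></html>"
print(plain_text(sample))  # prints: TitleHello world!
```

For real web pages, the BeautifulSoup approach from the notebook cell above remains the better choice because it copes with malformed HTML and offers many more ways to navigate the parsed document.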

While Jupyter by default is configured to periodically autosave the notebook, this is a good point to explicitly save the notebook with the newly added content. You can do this by clicking the disk symbol in the toolbar or simply pressing ‘S’ while in ‘Command mode’. The time of the last save will be shown at the top of the document, right next to the notebook name. You can always revert to the last saved version (also referred to as a ‘Checkpoint’ in Jupyter) using File -> Revert to Checkpoint. Undo with CTRL-Z works as expected for the content of a cell while in ‘Edit mode’; however, you cannot use it to undo changes made to the structure of the notebook such as moving cells around. A deleted cell can be recovered by pressing ‘Z’ while in ‘Command mode’ though.

Now that we have a cell with some Python code in our notebook, it is time to execute the code and show the output it produces in the notebook. For this, you simply have to click the run button in the toolbar or press ‘SHIFT+Enter’ while in ‘Command mode’ (the shortcut also works in ‘Edit mode’). This will execute the currently active cell, place the produced output below the cell, and activate the next cell in the notebook. If there is no next cell (like in our example so far), a new cell will be created. While the code of the cell is being executed, a * will appear within the square brackets of the “In [ ]:”. Once the execution has terminated, the * will be replaced by a number that increases by one with each cell execution. This allows for keeping track of the order in which the cells in the notebook have been executed.

Figure 3.9 below shows how things should look after you have executed the code cell. The output produced by the print call is shown below the code in a text field with a vertical scrollbar. We will later see that Jupyter provides the means to display other output than just text, such as images or even interactive maps.
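The execution counter shown in the square brackets is also saved in the notebook file, which is a JSON document in which each code cell has an execution_count field. As a minimal sketch of how this can be used to reconstruct the order in which cells were run, the following function works on a notebook that has already been loaded into a Python dictionary (for instance with json.load). The function name execution_order and the sample data are made up for this illustration.

```python
def execution_order(notebook):
    """Return (execution_count, first source line) for all executed code
    cells, sorted by the order in which they were run."""
    executed = []
    for cell in notebook["cells"]:
        count = cell.get("execution_count")
        if cell["cell_type"] == "code" and count is not None:
            source = cell["source"]
            # "source" may be stored as one string or as a list of lines.
            text = source if isinstance(source, str) else "".join(source)
            first_line = text.splitlines()[0] if text else ""
            executed.append((count, first_line))
    return sorted(executed)


sample_notebook = {
    "cells": [
        {"cell_type": "markdown", "source": "# Heading"},
        {"cell_type": "code", "execution_count": 3, "source": "print(x)"},
        {"cell_type": "code", "execution_count": 2, "source": ["x = 1\n", "x += 1"]},
        {"cell_type": "code", "execution_count": None, "source": "unused = 0"},
    ]
}
print(execution_order(sample_notebook))
# prints: [(2, 'x = 1'), (3, 'print(x)')]
```

In the sample, the second code cell was run before the first one, which is exactly the kind of situation the counter helps you to spot when cells are executed out of their top-to-bottom order.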